Denys Golotiuk

Software Engineering Expert

- golotyuk@gmail.com

- +38 (093) 395-53-48

- Kyiv, Ukraine

Welcome 🇺🇦 I’m Denys, a software engineering expert based in Kyiv, Ukraine. I hold a Master's degree in engineering and an MBA. I have more than 18 years of experience building efficient technical teams and delivering software products, with a focus on data-intensive infrastructures, heavy loads, and analytical environments. Open-source stack. Technology-agnostic.

I create and deliver web (browser-based) software. Typically I deal with control systems (so-called admin panels), integrations, and automations of all kinds.

I create systems that transform large volumes of randomly structured or unstructured data into accessible analytical products consumed by analysts, data engineers, managers, and APIs.

I can lead technical teams and make sure results are delivered. My approach is to build on people's strengths while keeping the focus on targets, and to adapt the process to keep up with the feedback loop.

While ML is overhyped nowadays, it can still serve as an efficient tool for automation and for data processing at a scale far beyond human capabilities. I can make LLMs do the right job.

Given the amount of data we have to deal with, system performance is often either overengineered or sacrificed. Still, performance engineering has to be dealt with when the time comes.

In the complex flow of data between storage systems and humans, security and availability have to be designed and implemented. Regulations like GDPR are meant to improve our systems.

While infrastructure cost is not a big concern at the startup stage, it can drain funds really fast during the growth stage. The right balance between cloud solutions and bare metal can help.

Before it can bring any value, data has to be collected, cleansed, and processed. Building scalable and flexible data feeds and pipelines is critical for extracting valuable insights later on.

Training is part of my management approach; I'm also an occasional blogger and like to share my knowledge. I run training sessions tailored to specific cases.

Sharing my experience and findings on building efficient software engineering teams that deliver. How to get real and keep the focus on shipping results instead of excuses.


The simple idea behind machine learning is to describe the given data with a math function. Why? Because a math function lets us get outputs for inputs we have never seen.

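To make the "describe data with a function" idea concrete, here is a minimal sketch, not taken from the post itself: it fits a straight line y = a·x + b to a few known points with ordinary least squares, then uses that line to predict an output for an input it has not seen. The function names `fit_line` and `predict` and the sample data are hypothetical, chosen only for illustration.

```python
def fit_line(xs, ys):
    """Fit y = a*x + b to the points by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    # The fitted line passes through the point of means
    b = mean_y - a * mean_x
    return a, b


# Hypothetical known data that follows y = 2x + 1
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
a, b = fit_line(xs, ys)


def predict(x):
    """Use the fitted function to get an output for an unseen input."""
    return a * x + b


print(a, b)         # 2.0 1.0
print(predict(10))  # 21.0, although x = 10 was never in the data
```

Real data is noisy and rarely linear, so practical models use richer function families, but the principle of fitting a function and then evaluating it on new inputs stays the same.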

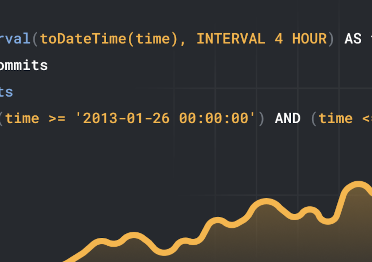

Many datasets are collected over time so that meaningful trends can be analyzed and discovered. Each data point usually has a timestamp assigned, for example when we collect logs or business events.

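A common first step with timestamped data is to bucket events by time period so a trend becomes visible. The sketch below is an illustration under assumptions, not the approach from the post: it assumes events arrive as ISO 8601 timestamp strings and counts them per calendar day. The `daily_counts` helper and the sample `events` list are hypothetical.

```python
from collections import Counter
from datetime import datetime

# Hypothetical event log: each entry is the time an event was recorded
events = [
    "2024-03-01T09:15:00",
    "2024-03-01T17:40:00",
    "2024-03-02T08:05:00",
    "2024-03-02T12:30:00",
    "2024-03-02T21:10:00",
    "2024-03-03T10:00:00",
]


def daily_counts(timestamps):
    """Count events per calendar day, returned as (date, count) sorted by date."""
    days = Counter(datetime.fromisoformat(ts).date() for ts in timestamps)
    return sorted(days.items())


for day, count in daily_counts(events):
    print(day.isoformat(), count)
# 2024-03-01 2
# 2024-03-02 3
# 2024-03-03 1
```

For larger volumes the same grouping is usually pushed down into an analytical database or a dataframe library rather than done in plain Python.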